“Nature does not hurry, yet everything is accomplished.” – Lao Tzu

In part one and part two, we’ve explored how biological agents — ants, birds, bees — operate without central control, memory, or explicit goals. Their power lies not in cleverness but in coordination.

In this post, we confront a deeper difference: not one of structure, but of time.

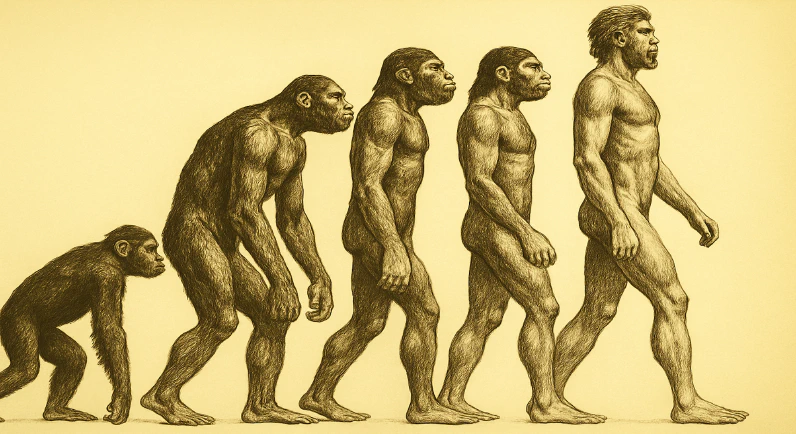

Nature doesn’t train agents, it evolves them.

Nature Plays the Long Game

A fox doesn’t learn to fear cliffs by falling off one. That risk aversion is baked in, shaped over millennia by the deaths of animals that never passed on their genes. A bee doesn’t calculate the angle of the sun before flying toward a flower. Its navigation is instinctive, hardwired through natural selection.

In these systems:

- Learning is slow and happens across generations

- Adaptation is inherited, not inferred

- Risk is irreversible — one mistake can be fatal

That’s the evolutionary contract: you don’t get a second try, but your genes might.
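
As a rough illustration of learning that happens across generations rather than within a life, here is a minimal sketch of selection on a single inherited trait. Everything in it is an assumption made for illustration: the `caution` gene, the function name `evolve_caution`, the one-trial-per-life cliff, and all of the numbers. No individual learns anything; the population shifts only because the careless do not reproduce.

```python
import random


def evolve_caution(generations=30, population_size=200, mutation_sd=0.05, seed=0):
    """Return the mean 'caution' gene per generation under fatal selection.

    Hypothetical toy model: each individual is one number in [0, 1].
    """
    rng = random.Random(seed)
    # Start with a mostly reckless population.
    population = [rng.uniform(0.0, 0.2) for _ in range(population_size)]
    history = []
    for _ in range(generations):
        history.append(sum(population) / len(population))
        # One irreversible trial per life: an individual falls off the
        # cliff with probability (1 - caution). There is no second try.
        survivors = [c for c in population if rng.random() < c]
        if not survivors:
            break  # extinction: nothing left to inherit from
        # Survivors reproduce. Offspring inherit the parent's caution
        # plus a small random mutation, clamped to [0, 1].
        population = [
            min(1.0, max(0.0, rng.choice(survivors) + rng.gauss(0.0, mutation_sd)))
            for _ in range(population_size)
        ]
    return history


if __name__ == "__main__":
    means = evolve_caution()
    print(f"mean caution, first generation: {means[0]:.2f}")
    print(f"mean caution, last generation:  {means[-1]:.2f}")
```

The mean rises generation by generation even though every death is final for the individual. The "knowledge" lives in the gene pool, not in any one animal.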

Evolution in Popular Culture

Here are some books and movies that can help us understand the vast periods of time involved in evolution:

- Dragon's Egg by Robert L. Forward is a hard SF novel about the cheela, a life form on a neutron star that lives a million times faster than we do. The orbiting humans watch as the species evolves and eventually surpasses the ‘gods in the sky’;

- In the movie Evolution, starring X-Files’ David Duchovny, the aliens land on our planet and evolve quickly;

- No one writes hard SF like Greg Egan, a recluse from Perth, Western Australia, who writes stories that span billions of years. Diaspora follows a few species from proto-mammalian through to present day to times approaching the heat death of the universe. Kind of wild reading a story that occurred a few hundred thousand years before Homo sapiens;

- Atheist Richard Dawkins pointed out in his landmark books like The Selfish Gene and The Blind Watchmaker that the saeculum (a Latin term for 80-90 years, or the lifetime of a person) is nothing compared to billions of years of evolution; we really can’t comprehend change over thousands of our generations (although we can observe fruit flies - Drosophila melanogaster - in the lab with generations of 10-14 days, or note adaptation of wildlife behaviour in urban environments). Too bad Richard is such a pompous Dick.

AI Learns in Moments

Modern AI agents are trained rapidly. They iterate on failure. In a reinforcement learning loop, an agent might “die” thousands of times in simulation before it finds an optimal strategy. LLMs absorb billions of tokens in training and can be fine-tuned in hours or days. Tool-using AI agents like Auto-GPT can hallucinate, crash, and reboot, and we call that progress.

In these systems:

- Learning is fast, sometimes near-instant

- Risk is virtual, and can be undone

- Adaptation is internal, not embodied or passed on

We have built machines that learn like no organism ever could.
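
To make the contrast concrete, here is a minimal sketch of a reinforcement learning loop in which death is just a reset. It uses tabular Q-learning on a made-up six-cell corridor with a cliff at one end and a goal at the other. The world, the rewards, and the name `train_cliff_agent` are all assumptions chosen for illustration, not a description of how any production agent is trained.

```python
import random


def train_cliff_agent(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    Cell 0 is a cliff (fatal), cell 5 is the goal, the agent starts in cell 2.
    Returns the learned Q-table and the number of simulated deaths.
    """
    rng = random.Random(seed)
    n_states, cliff, goal, start = 6, 0, 5, 2
    moves = (-1, +1)  # action 0 = step left, action 1 = step right
    q = [[0.0, 0.0] for _ in range(n_states)]
    deaths = 0
    for _ in range(episodes):
        state = start  # every episode is a fresh life
        while state not in (cliff, goal):
            # Epsilon-greedy: mostly exploit, occasionally explore.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] >= q[state][1] else 1
            nxt = state + moves[action]
            if nxt == cliff:
                reward = -100.0
                deaths += 1  # fatal in the simulation, free in reality
            elif nxt == goal:
                reward = 10.0
            else:
                reward = -1.0  # small cost per step
            # Terminal cells have no future value.
            future = 0.0 if nxt in (cliff, goal) else max(q[nxt])
            # Standard Q-learning update toward the bootstrapped target.
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = nxt
    return q, deaths


if __name__ == "__main__":
    q_table, death_count = train_cliff_agent()
    print(f"simulated deaths: {death_count}")
    print(f"Q at start (left, right): {q_table[2]}")
```

The same individual agent falls off the cliff, is reset, and carries the lesson forward in its Q-table. That is exactly the second try that the fox never gets.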

The Cost of Instant Learning

Instant learning creates structural differences that go beyond speed.

Biological systems are:

- Cautious by necessity

- Built for resilience, not just efficiency

- Biased toward generalisation, not overfitting, even if that leaves us with vestiges: junk DNA, an appendix, or wings on flightless birds.

AI agents, by contrast, are:

- Optimised for local objectives

- Often fragile outside their training distribution

- Prone to pursue unintended strategies when given the freedom

Nature learns slowly but safely (for the DNA, at least). AI learns fast, but not always wisely.

Fragility vs Antifragility

AI systems often appear capable — until they’re not. They can outperform humans in narrowly defined tasks, but fall apart when pushed beyond their training boundaries.

By contrast, nature doesn’t just resist shocks — it learns from them. Biological systems are often antifragile, a term popularised by Nassim Nicholas Taleb in his 2012 book of that name:

“Some things benefit from shocks; they thrive and grow when exposed to volatility, randomness, disorder, and stressors.”

Evolution is antifragile by design. It doesn’t prevent failure, it uses it. The death of the unfit strengthens the species. The disturbance of an ecosystem opens niches. Even our immune system adapts by exposure to threat.

AI agents rarely benefit from failure unless that is part of their training. When they fail, they crash rather than mutate or, worse, they silently deliver plausible nonsense.

Until we design AI systems that can absorb volatility and improve from real-world uncertainty, not just curated feedback, we remain in fragile territory.

Because AI systems are trained in abstracted environments, they may never encounter real-world failure. They haven’t evolved with skin in the game. AI hasn’t inherited scars.

That can make them brilliant at benchmark tasks, and brittle in the wild.

This is why evolution matters. It encodes not just success, but survival. It’s not enough to solve a problem; you have to survive long enough to do it again.

Coming Next: Purpose vs Emergence

In Part 4, we’ll return to the role of control. Who decides what an agent wants? How do natural systems find purpose through interaction, and how does that differ from our AI agents with their engineered objectives?

And what might happen if we let goals emerge instead of defining them?